In the wake of several prominent supply chain attacks, developing controls to secure the software supply chain has become a major focus for those in the cybersecurity space. Three recently released frameworks (Google’s SLSA, Microsoft’s SCIM, and CNCF’s Software Supply Chain Best Practices) propose guidelines for ensuring software integrity and improving the overall security of software supply chains across the industry. This blog post provides a brief overview of these three frameworks, outlines their common requirements, and explains how to satisfy them.

Overview of Supply Chain Security Frameworks

Microsoft’s Supply Chain Integrity Model, or SCIM for short, specifies an end-to-end system for validating arbitrary artifacts (software and hardware) in terms of supply chains whose integrity has been proven. SCIM defines minimum standards (without specifying implementation requirements) around the preparation, storage, distribution, consumption, validation and evaluation of arbitrary evidence about artifacts. In other words, SCIM describes principles and a proposed model for conveying evidence but does not address what evidence must be conveyed.

On the other hand, both the CNCF’s Software Supply Chain Best Practices and Google’s Supply-chain Levels for Software Artifacts (SLSA) follow an assurance-level approach to ensuring software integrity. CNCF lays out three assurance levels at which software products can be produced (low, medium, and high), while SLSA lays out four (SLSA 1 through SLSA 4). Both frameworks stress the importance of securing the source code, third-party dependencies, build systems, artifacts, and deployments, and both make use of automation, cryptographic attestations, and other controls to secure those assets and systems.

Vulnerabilities & Risks

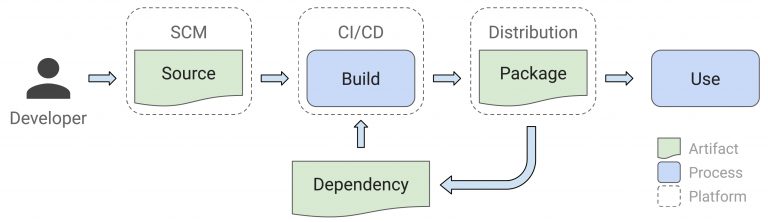

From a high level, a typical software delivery process looks like this:

A developer submits code changes to a source control repository, which triggers a build. The build service retrieves the source code from the repository and compiles the code. The binaries are signed and packaged. Lastly, the package is delivered to end-users or, in some cases, delivered to downstream projects that use that package in their software.

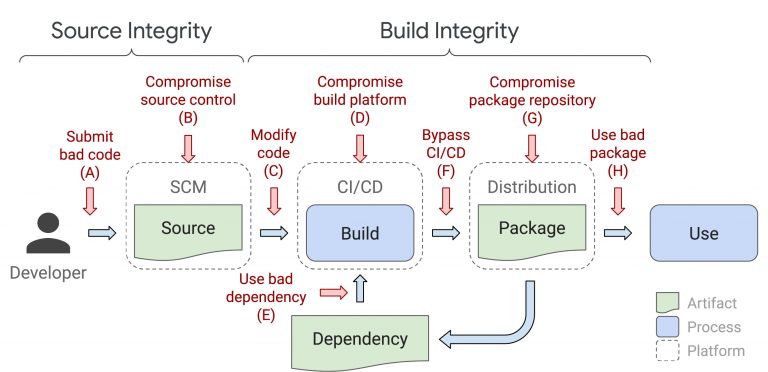

There are multiple attack vectors that threat actors can use to inject malware into legitimate software products. The following diagram shows all of the potential vulnerabilities:

Each of these eight attack vectors has been exploited against real businesses, as documented by both the SLSA framework and the CNCF. Had all of the SLSA 4 and CNCF high-assurance-level requirements been satisfied, each of these attacks would have been prevented or detected very early on.

Meeting High Assurance Requirements

What follows is a summary of some of the frameworks’ high assurance requirements.

Version Control With Verified History

Every change to a code base must be attributed to an authorized developer who was authenticated through multiple factors, and must carry a timestamp indicating when the change was made.

Additionally, every change to the source code must be tracked through a version control system that keeps a record of previous versions of the code base. There must be a way to reference a particular version of the code base and a specific commit to it. In git, for example, this would be the repository URL, the branch/tag/ref, and the commit ID. Commits and versions of the code base must both be immutable.
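As a rough illustration of what such a reference might look like in tooling, the sketch below models the three git coordinates named above as an immutable record. The `SourceRef` class, its field names, and the `pinned()` helper are hypothetical names chosen for this example; they are not part of SLSA, the CNCF paper, or git itself.

```python
import re
from dataclasses import dataclass

# Full git object IDs are 40 hex characters (SHA-1) or 64 (SHA-256 repositories).
_FULL_COMMIT_ID = re.compile(r"^(?:[0-9a-f]{40}|[0-9a-f]{64})$")


@dataclass(frozen=True)  # frozen: the reference cannot be altered after creation
class SourceRef:
    """Hypothetical record pinning one exact revision of a code base."""
    repo_url: str
    ref: str        # branch, tag, or other ref, kept for human context
    commit_id: str  # the only field that identifies the content unambiguously

    def __post_init__(self):
        # Reject abbreviated or malformed IDs, which could be ambiguous.
        if not _FULL_COMMIT_ID.match(self.commit_id):
            raise ValueError("commit_id must be a full lowercase hex object ID")

    def pinned(self) -> str:
        """Return a string that names the repository and the exact commit."""
        return f"{self.repo_url}@{self.commit_id}"
```

Branches and tags can move, so in this sketch the ref is recorded only for readability, and the full commit ID is what actually pins the source.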

Two-Person Secure Code Reviews

Every commit that goes into a final release must be reviewed by at least one qualified person other than its author. The code should be reviewed for correctness, security issues, necessity, quality, and so on.
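A release gate could enforce this rule mechanically. The following is a minimal sketch, assuming review metadata is already available as simple records; the `Commit` type, its fields, and `unreviewed_commits` are hypothetical and do not correspond to any particular code hosting API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Commit:
    """Hypothetical review record for one commit."""
    commit_id: str
    author: str
    approvers: frozenset = field(default_factory=frozenset)


def unreviewed_commits(commits, qualified_reviewers):
    """Return the IDs of commits lacking an independent, qualified approval."""
    missing = []
    for commit in commits:
        # Keep only approvals from qualified reviewers, then discard the
        # author's own approval: a self-review is not a second person.
        independent = (commit.approvers & set(qualified_reviewers)) - {commit.author}
        if not independent:
            missing.append(commit.commit_id)
    return missing
```

A release would proceed only when the returned list is empty.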

Automated Builds In A Secure CI/CD Environment

Builds should never be completed manually on local workstations. Instead, the build process should be fully automated and performed in a build environment with the following attributes:

- Isolated – the build process must be unaffected by other builds, past or concurrent

- Hermetic – the build must declare all external dependencies and use only immutable references that can be independently verified

- Parameterless – nothing should affect the build’s output except the source code

- Ephemeral – every build should take place in a container or VM that was created solely for that one particular build
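The hermetic property in particular lends itself to an automated check. The sketch below assumes a hypothetical dependency manifest, a mapping from dependency name to a reference string, and treats only `sha256:<64 hex characters>` references as immutable. The manifest shape and the function name are assumptions made for illustration.

```python
import re

# An immutable reference in this sketch is a content digest, not a version or tag.
_SHA256_REF = re.compile(r"^sha256:[0-9a-f]{64}$")


def non_hermetic_dependencies(dependencies):
    """Return the sorted names of dependencies not pinned to a content digest.

    `dependencies` maps a dependency name to its declared reference string.
    """
    return sorted(
        name for name, ref in dependencies.items() if not _SHA256_REF.match(ref)
    )
```

References such as `latest` or a bare version number would be flagged, since whatever they point to can change between builds.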

Verified Reproducible Builds

In addition to the qualities described above, the build process should be verified reproducible. For a build to be reproducible, according to the reproducible builds website, “the build system needs to be made entirely deterministic: transforming a given source must always create the same result.” The same source code must always produce the same binary output when compiled, bit for bit.

A verified reproducible output is a slightly stronger requirement. The SLSA framework describes it as “using two or more independent build systems to corroborate the provenance of a build.”

In other words, for a build process to be verified reproducible, it must be deterministic, in that it always produces the exact same binary output from the exact same source code input, and two independent build servers must each perform a build of the same source code to ensure that the binaries match on both systems.
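The comparison step can be sketched in a few lines. This example assumes each independent build system hands back the bytes of the binary it produced; the function names and the shape of `outputs` are illustrative only.

```python
import hashlib
from typing import Mapping


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a build output."""
    return hashlib.sha256(data).hexdigest()


def verified_reproducible(outputs: Mapping[str, bytes]) -> bool:
    """Return True if every independent builder produced identical bytes.

    `outputs` maps a builder name to the binary that builder produced
    from the same source revision.
    """
    if len(outputs) < 2:
        # A single build cannot corroborate itself.
        raise ValueError("at least two independent builds are required")
    digests = {sha256_hex(binary) for binary in outputs.values()}
    return len(digests) == 1
```

Any difference at all, even a single embedded timestamp, yields a different digest, which is why the build must be deterministic before this check can pass.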

Downstream Verification

While the software supplier can verify the integrity of what it produces, the end user must perform their own independent verification. But how can the end user do this? Verifying checksums only confirms that what was downloaded matches what the end user expected to download; it does nothing to verify that the binaries were produced from the correct source code. If the end user has the source code and build instructions, it may be possible to perform the verification, but this is an onerous task that many end users will likely skip. Additionally, what about use cases where the source code is not made available to end users?

A more scalable approach is to use attestations that can be automatically verified by the end user’s device. Using this approach, the end user’s device can perform the verification in real-time during installation and/or runtime.
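To make the idea concrete, here is a minimal sketch of the checks a device might run once it holds an attestation. It assumes a deliberately simplified, hypothetical attestation (a dictionary with `artifact_sha256`, `source_repo`, and `builder_id` keys) whose signature has already been verified by some other means; real attestation formats and signature verification are outside the scope of this example.

```python
import hashlib


def check_attestation(artifact: bytes, attestation: dict, policy: dict) -> list:
    """Return a list of policy violations; an empty list means the checks passed.

    Assumes the attestation's own signature was verified beforehand.
    All field names here are hypothetical.
    """
    problems = []
    # 1. The attestation must be about the bytes that were actually downloaded.
    if hashlib.sha256(artifact).hexdigest() != attestation.get("artifact_sha256"):
        problems.append("artifact digest does not match attestation")
    # 2. The artifact must claim to come from the expected source repository.
    if attestation.get("source_repo") != policy["expected_source_repo"]:
        problems.append("unexpected source repository")
    # 3. The build must have run on a builder the end user trusts.
    if attestation.get("builder_id") not in policy["trusted_builders"]:
        problems.append("untrusted builder")
    return problems
```

Unlike a bare checksum comparison, the second and third checks tie the binary to where it was built from and by whom, which is the gap described above.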

The obvious implementation that comes to mind is to leverage a repository of attestations that can be queried by the end user’s device. However, this solution on its own has several drawbacks:

- End-user devices must be updated to query the repository and then parse, interpret, and act on the response.

- Some end-user devices may not have network access to perform the querying.

- If a repository experiences downtime, this could impact the running applications or installation of software on remote devices.

- How would the device know the URL of the attestation repository to access?

The next obvious solution is to provide the attestation as part of the binaries. Depending on the approach, this could also have some drawbacks. For example, if the attestations are baked into the binaries, this would likely not be backwards compatible with existing systems that consume the binaries and would therefore require updates to all of those systems. If, instead, the binaries are delivered in a package where the package contains both the binaries and the attestations in a manner where they can be separated (e.g., everything included in a zip file), tools that download and use the binaries would have to be updated to expect this packaging format.

An alternative approach is to leverage a procedure that is already widely used, but to assign a stricter meaning to it. Code signing is used throughout the industry and we mostly take it to mean that the signed binaries were produced by the owner of the code signing key (i.e., the subject in the code signing certificate). Indeed, for standard code signing we don’t want to change that interpretation. However, there are different levels of code signing. For example, you can sign code with a standard code signing certificate or an Extended Validation (EV) certificate. In the PGP world (used, for example, by RPM and Debian) it is possible to have your public key signed by an auditor to assign it a higher level of trust via the web of trust model.

Following this approach, we can take the higher levels of code signing to mean that the signer has been audited and found to use a process that complies with SLSA Level 4 (or some other framework/level). The end result is that downstream tools don’t need to change and validation can be automated (and is mostly already implemented). Certificate authorities would need to modify their issuance process to include an audit of software vendors’ practices to ensure compliance before issuing certificates. For customers and tools that wish to further download build-specific attestation artifacts, an extension to the X.509v3 certificate can be used that provides the URL of the attestation artifact repository. In the PGP world, this URL can be added to the comment section of the PGP public key.

Build Systems & Cryptographic Services

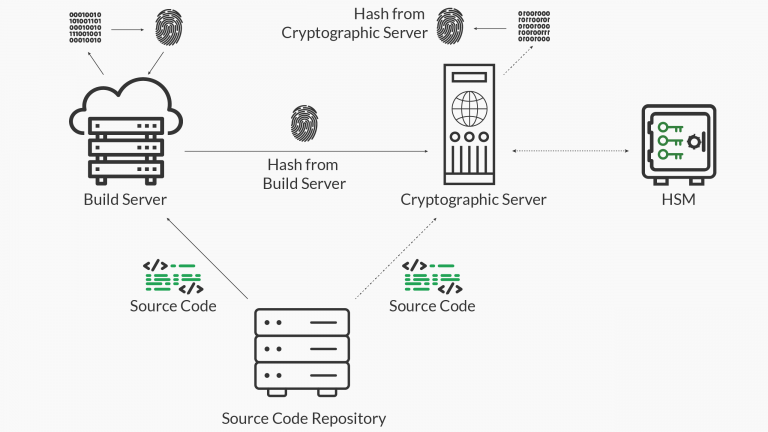

If the signature (and corresponding trusted certificate) on the binaries is an indication of the software supplier’s compliance, then the code signing process must verify compliance of each build before generating the signature. In a manual release environment, this may be doable via manual processes (albeit tedious and prone to human error), but we live in a world of automation and continuous integration. Therefore, an automated and performant method of verifying compliance is needed.

If the signing keys are compromised, there is no trust in the system, which means that the first priority is to properly protect the keys. Once protected, infrastructure is needed to securely and efficiently use the private keys for signing. We have written about this at great length and we encourage readers to read our previous posts on these topics. The summary is that a secure signing service that supports hash-signing, keys protected by a hardware security module (or key management system), and strong authentication/authorization is the optimum solution.

With the keys properly protected, the next challenge is satisfying the verified reproducible build requirement. To achieve this, the signing system can make use of one or more separate verification servers to independently verify the hash to sign as part of the code signing system. We have written about this in detail before, but the basic idea is shown below.
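The gating logic can be sketched as follows. This is only an illustration of the control flow, not a description of any product's implementation: the verifiers are modeled as hypothetical callables that rebuild the source and return a hex digest, and an HMAC stands in for the real signing operation, which would be performed with a private key held in an HSM or key management system.

```python
import hashlib
import hmac


class VerificationError(Exception):
    """Raised when an independent rebuild does not match the hash to sign."""


def sign_if_verified(digest_hex: str, verifiers: dict, signing_key: bytes) -> str:
    """Sign `digest_hex` only if every independent verifier reproduces it.

    `verifiers` maps a verifier name to a callable that rebuilds the same
    source revision and returns the SHA-256 hex digest of its output.
    """
    if not verifiers:
        raise VerificationError("no independent verifier configured")
    for name, rebuild in verifiers.items():
        if not hmac.compare_digest(rebuild(), digest_hex):
            raise VerificationError(f"verifier {name!r} produced a different hash")
    # Stand-in for the real signature (assumption: the actual operation is an
    # asymmetric signature computed inside an HSM, never an in-process HMAC).
    return hmac.new(signing_key, bytes.fromhex(digest_hex), hashlib.sha256).hexdigest()
```

Because only the hash travels to the signing service, the binaries themselves never need to leave the build environment.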

The video below shows what this process looks like in action.

Enhancing Performance

A natural concern is the performance cost of implementing verifiable builds in the CI/CD pipeline. However, with some careful architecting this process can be parallelized and even reduce the overall build time. To do this, we allow the code signing signature to be immediately generated and perform the verified reproducible build immediately thereafter. This allows the CI/CD pipeline to continue with its processes without having to wait for the verified reproducible build to finish. However, since the signature has already been generated, software suppliers must be sure to take immediate action if a build cannot be verified, as that could be a sign of an attack.
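A minimal sketch of this sign-first, verify-afterwards flow is shown here, using a thread pool to stand in for the separate verification server. The `sign`, `rebuild`, and `on_mismatch` callables are hypothetical hooks supplied by the caller; in practice the alert hook would trigger revocation or incident response.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Tuple


def sign_then_verify(
    digest_hex: str,
    sign: Callable[[str], str],
    rebuild: Callable[[], str],
    on_mismatch: Callable[[str], None],
    executor: ThreadPoolExecutor,
) -> Tuple[str, Future]:
    """Return a signature immediately and verify the build in the background."""
    signature = sign(digest_hex)  # the pipeline can continue with this right away

    def _verify() -> bool:
        verified = rebuild() == digest_hex
        if not verified:
            # The signature is already out, so a mismatch needs immediate action.
            on_mismatch(digest_hex)
        return verified

    # The independent rebuild runs concurrently with the rest of the pipeline.
    return signature, executor.submit(_verify)
```

The returned future lets a later pipeline stage, such as publication, wait for the verification result before release if the supplier prefers a hard gate at that point.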

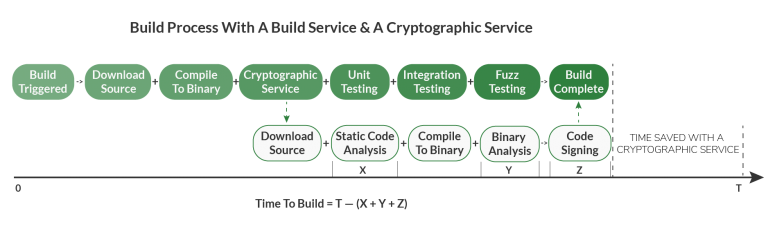

As an added bonus, additional tasks that the build server would typically be responsible for can be handled by the independent verifier server, once the build has been verified. This allows for parallelizing of tasks that would otherwise be done serially.

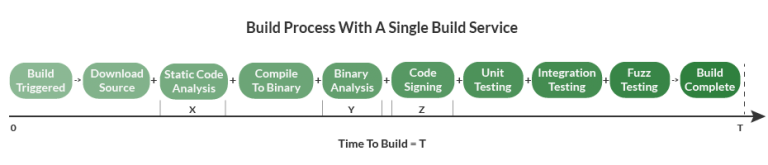

Here’s what a typical build process looks like when completed linearly on one server:

Here’s what the build process looks like when it is completed in parallel on two servers:

With tests and scans offloaded to the cryptographic service, the build completes faster than it would with just one server performing every task, one at a time.

The video below shows what this looks like in action.

Protecting the Source Code

With the features above implemented, software suppliers have strong assurances that only code originating from the source code repository will get signed. The next step is to ensure that the code in the repository is “good”. This translates to ensuring that only valid users are making changes to the repository and that the changes that are being made are valid.

We have written about this in detail before but ultimately this boils down to using strong authentication and authorization (e.g., multi-factor authentication, device authentication, commit signing, etc. combined with the principle of least privilege), having every commit reviewed by a qualified reviewer, and scanning the code and resulting binaries with automated tools (e.g., SAST, DAST, fuzzing, etc.). If a source code repository doesn’t support advanced authentication techniques, they can be transparently enforced by using key-based authentication with proxied keys.

Putting This Into Practice

While this may sound like a lot to accomplish, it is easy to do with GaraSign and deployments can be done with minimal intrusion to your existing environment.

Get in touch with the Garantir team to learn more about deploying a secure software supply chain process for your enterprise.