For most large enterprises, enforcing strong security controls—especially multi-factor authentication (MFA)—is a top priority. Microsoft famously reported that MFA can block over 99.9% of account compromise attacks. With numbers like that, implementing MFA seems like an obvious decision.

Yet deploying MFA across all enterprise resources is rarely simple. Large organizations have thousands of users and countless devices across multiple time zones. Many employees work remotely or from personal devices, adding further complexity.

Traditionally, MFA and device authentication are enforced at the application layer, which means modifying existing software and manually installing agents or plugins on every server. For enterprises running thousands of systems—email servers, web apps, file servers, databases, and more—this becomes a massive, long-term operational burden.

This post outlines a new approach to enforcing MFA and other security controls without modifying applications or touching server code. The key is to enforce controls at the session layer, at the moment a client authenticates and before its session with the application is established. Downstream systems remain untouched while still benefiting from enhanced security.

Types of Security Controls

MFA is one of the most widely known controls, but modern identity and access management (IAM) and privileged access management (PAM) programs rely on many others. A well-designed system should let teams enable these controls with a policy change, not with custom software updates.

Below is a non-exhaustive list of security controls enterprises should be able to implement:

Multi-Factor Authentication (MFA)

Validate identity using two or more factors—something the user knows, has, or is.
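Which factors to accept is a policy choice. One common "something the user has" factor is a time-based one-time password (TOTP, RFC 6238). The minimal sketch below checks one using only the Python standard library. It assumes a secret that was shared with the user's authenticator app at enrollment. The function names and the one-step clock-skew window are illustrative choices, not part of any particular product.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset from the low nibble
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current step or +/- `window` steps to tolerate clock skew."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step, len(submitted)), submitted)
        for i in range(-window, window + 1)
        if now + i * step >= 0  # skip negative timestamps near the epoch
    )


if __name__ == "__main__":
    secret = b"12345678901234567890"  # RFC 6238 SHA-1 test secret
    print(totp(secret, at=59, digits=8))  # 94287082
```

With the RFC 6238 test secret and time 59, the 8-digit code is `94287082`. This matches the published SHA-1 test vector.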

Device Authentication

Ensure the device is recognized, compliant, and secure before granting access—especially critical for remote workers.
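A device check usually combines two questions. Is this a device we enrolled, and for whom? Is it currently compliant? The sketch below assumes a hypothetical enrollment registry and self-reported posture fields. Self-reported attributes can be spoofed, so in practice the device identity would ideally be proven cryptographically, for example with a device certificate.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class DevicePosture:
    """Attributes reported for a device; the field names are illustrative."""
    device_id: str
    disk_encrypted: bool
    os_patched: bool
    screen_lock: bool


# Hypothetical enrollment registry: device ID -> user the device was issued to.
ENROLLED_DEVICES: Dict[str, str] = {"laptop-1234": "alice", "laptop-5678": "bob"}


def device_allowed(user: str, posture: DevicePosture) -> Tuple[bool, str]:
    """Return (allowed, reason); the device must be enrolled to this user and compliant."""
    owner = ENROLLED_DEVICES.get(posture.device_id)
    if owner is None:
        return False, "unknown device"
    if owner != user:
        return False, "device enrolled to a different user"
    if not (posture.disk_encrypted and posture.os_patched and posture.screen_lock):
        return False, "device not compliant"
    return True, "ok"
```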

Just-in-Time Access (JIT)

Grant access only when needed and only for the required duration (e.g., SSH access to production servers only during approved maintenance windows).
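A JIT grant is essentially a (user, resource, time window) record that the policy engine checks at the moment of use. Here is a minimal sketch. It assumes timezone-aware UTC timestamps and a hypothetical resource name, `ssh:prod-db-01`.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, Optional


@dataclass(frozen=True)
class AccessGrant:
    """A time-boxed grant; the fields are hypothetical."""
    user: str
    resource: str
    not_before: datetime  # timezone-aware, inclusive
    not_after: datetime   # timezone-aware, exclusive


def jit_allowed(grants: Iterable[AccessGrant], user: str, resource: str,
                now: Optional[datetime] = None) -> bool:
    """True only if some grant for this user and resource covers the current instant."""
    now = now if now is not None else datetime.now(timezone.utc)
    return any(
        g.user == user and g.resource == resource and g.not_before <= now < g.not_after
        for g in grants
    )


if __name__ == "__main__":
    window = AccessGrant(
        "alice", "ssh:prod-db-01",
        datetime(2024, 1, 6, 22, 0, tzinfo=timezone.utc),  # example maintenance window
        datetime(2024, 1, 7, 2, 0, tzinfo=timezone.utc),
    )
    print(jit_allowed([window], "alice", "ssh:prod-db-01",
                      datetime(2024, 1, 6, 23, 30, tzinfo=timezone.utc)))  # True
```

The window is half-open, so a request at exactly `not_after` is rejected. Expired grants simply stop matching, and nothing needs to be cleaned up on the target server.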

Approval Workflows

Require a quorum of approvers before access is granted. If any approver rejects, access is denied and alerts are triggered.
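The quorum rule with a single-reject veto can be expressed as a small state machine. This sketch assumes three semantics: approvals count toward an M-of-N quorum, one rejection denies the request outright, and a decision is final once reached. The class and parameter names are hypothetical.

```python
from enum import Enum
from typing import Dict, Iterable


class Decision(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    DENIED = "denied"


class ApprovalRequest:
    """M-of-N approval where any single rejection denies the request."""

    def __init__(self, approvers: Iterable[str], quorum: int) -> None:
        self.approvers = set(approvers)
        if not 1 <= quorum <= len(self.approvers):
            raise ValueError("quorum must be between 1 and the number of approvers")
        self.quorum = quorum
        self.votes: Dict[str, bool] = {}

    def decision(self) -> Decision:
        if any(not approved for approved in self.votes.values()):
            return Decision.DENIED  # a real system would also raise an alert here
        if sum(self.votes.values()) >= self.quorum:
            return Decision.APPROVED
        return Decision.PENDING

    def vote(self, approver: str, approve: bool) -> Decision:
        if approver not in self.approvers:
            raise PermissionError(f"{approver!r} is not an approver for this request")
        if self.decision() is Decision.PENDING:  # once decided, later votes are ignored
            self.votes[approver] = approve
        return self.decision()
```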

Notifications

Alert relevant personnel when privileged keys or sensitive resources are accessed. Useful for detecting suspicious behavior early.
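Notification can be modeled as a hook that fires whenever a key tagged as sensitive is used. In this sketch, subscribers are plain Python callables. They stand in for whatever channel an organization actually uses, such as email, chat, or a SIEM. The key IDs are made up.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List, Set


@dataclass
class KeyUsageNotifier:
    """Calls every subscriber when a key in `sensitive_keys` is used."""
    sensitive_keys: Set[str]
    subscribers: List[Callable[[str], None]] = field(default_factory=list)

    def record_use(self, key_id: str, user: str) -> bool:
        """Return True if a notification was sent for this use."""
        if key_id not in self.sensitive_keys:
            return False
        ts = datetime.now(timezone.utc).isoformat()
        message = f"{ts} {user} used sensitive key {key_id}"
        for notify in self.subscribers:
            notify(message)
        return True
```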

Geolocation Restrictions

Allow access only from approved locations, optionally limited to certain time windows, and block high-risk geographies outright.
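A location check usually maps the client's IP address to a country with a geolocation database (not shown here). It then applies an allowlist, a blocklist, or both. The sketch below assumes ISO 3166-1 alpha-2 country codes and fails closed when the location is unknown. IP geolocation is approximate and can be sidestepped with VPNs or proxies, so it works best as one signal among several.

```python
from typing import AbstractSet, Optional


def geo_allowed(country_code: Optional[str],
                allowed: Optional[AbstractSet[str]] = None,
                blocked: AbstractSet[str] = frozenset()) -> bool:
    """Location check on an ISO 3166-1 alpha-2 code.

    Unknown location (None): deny, i.e. fail closed.
    Code in `blocked`: deny.
    `allowed` given: permit only listed codes; otherwise permit anything not blocked.
    """
    if country_code is None:
        return False
    code = country_code.upper()
    if code in blocked:
        return False
    return allowed is None or code in allowed
```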

Survey The Field

Before enforcing security controls across the enterprise, you must inventory:

- All end-users and their permissions
- All servers, applications, and systems that provide access to resources

This includes email servers, web apps, file servers, databases, source code repositories, build systems, production environments, and more. A complete, accurate list is essential.
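Once the inventory exists, you can check it programmatically. The sketch below uses a hypothetical data model: users mapped to resource names, and resources tagged with their protocol and current authentication method. It flags two common gaps. The first is resources still relying on passwords. The second is permissions that point at resources missing from the inventory.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Resource:
    name: str
    protocol: str     # e.g. "HTTPS/TLS", "SSH", "RDP"
    auth_method: str  # e.g. "password" or "key"


def inventory_gaps(users: Dict[str, Set[str]], resources: List[Resource]) -> Dict[str, List[str]]:
    """Flag resources not yet on key-based auth and permissions to unknown resources."""
    known = {r.name for r in resources}
    return {
        "password_auth": sorted(r.name for r in resources if r.auth_method != "key"),
        "orphaned_permissions": sorted(
            f"{user}->{res}"
            for user, perms in users.items()
            for res in perms
            if res not in known
        ),
    }
```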

Implement Key-Based Authentication

Different clients use different protocols to access resources:

- Browsers → HTTPS/TLS
- Remote Desktop → RDP
- Dev tools / IDEs → SSH or TLS
- Applications → Custom TLS-based connections

Despite these differences, they all share one thing:

Support for key-based authentication.

Key-based authentication avoids several weaknesses of passwords:

- Users often choose weak passwords
- Password databases are centralized targets that can be compromised
- Passwords are shared secrets: the server must store information that could be stolen

By contrast, key-based authentication uses unshared secrets. The user proves possession of a private key via a digital signature—without revealing the key. The private key can be stored securely in a key manager or Hardware Security Module (HSM).
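The challenge-response exchange can be sketched with textbook RSA so that it runs on the standard library alone. This is a toy. The primes are fixed, publicly known Mersenne primes, there is no padding scheme, and it must not be used for real keys. Production systems use a vetted library and signature scheme, with the private key held in an HSM. The sketch shows the essential property: the verifier needs only the public key and a fresh random challenge, and the private exponent never leaves the signer.

```python
import hashlib
import secrets

# TOY key material: two well-known Mersenne primes (2**521 - 1 and 2**607 - 1).
# Anyone can factor N, and there is no padding scheme. Illustration only.
P = 2**521 - 1
Q = 2**607 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (modular inverse, Python 3.8+)


def digest(message: bytes) -> int:
    """SHA-256 of the message as an integer; 256 bits is far smaller than N."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big")


def sign(message: bytes) -> int:
    """Signer side: the only place the private exponent D is used."""
    return pow(digest(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Resource-server side: needs only the public key (N, E), never D."""
    return pow(signature, E, N) == digest(message)


if __name__ == "__main__":
    # The server sends a fresh random challenge so a captured signature cannot be replayed.
    challenge = secrets.token_bytes(32)
    response = sign(challenge)
    print(verify(challenge, response))               # True
    print(verify(b"different challenge", response))  # False
```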

Most critical enterprise protocols, including TLS, SSH, and RDP, already support authentication based on public-key cryptography.

Centrally Secure All Cryptographic Keys

To maximize security, private keys should not reside on endpoint devices. Instead, they should be centrally stored in:

- A hardware security module (HSM)
- A secure enterprise key manager

Centralizing keys offers several benefits:

- Keys are protected by strong hardware-backed security
- Administrators can set policy in one place
- Easy permission management and revocation
- Complete visibility and audit trails
- Fine-grained, per-key enforcement of security controls

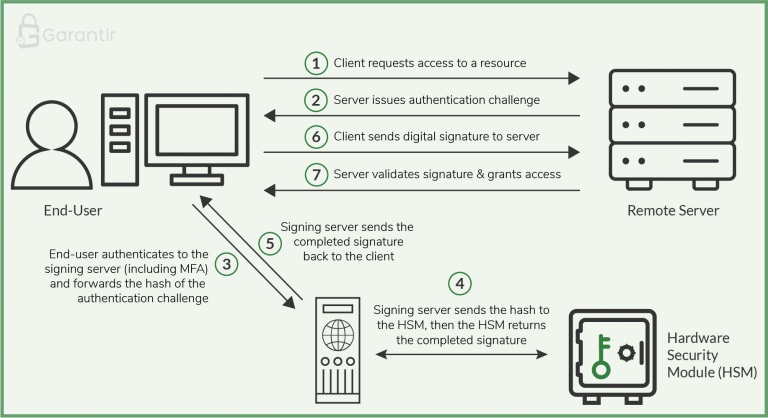
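These benefits follow from making authorization decisions per key, in one place. Here is a minimal sketch of such a registry. It assumes a hypothetical per-key policy with an allowed-user set, an MFA flag, and a revocation switch. Only key IDs and policy are stored here; the key material itself would stay in the HSM.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set, Tuple


@dataclass
class KeyPolicy:
    """Per-key controls; which controls exist is an illustrative assumption."""
    allowed_users: Set[str]
    require_mfa: bool = True
    revoked: bool = False


@dataclass
class KeyRegistry:
    """Central record of per-key policy plus an audit trail of every attempt."""
    policies: Dict[str, KeyPolicy] = field(default_factory=dict)
    audit_log: List[Tuple[str, str, str, str]] = field(default_factory=list)

    def authorize(self, key_id: str, user: str, mfa_passed: bool) -> bool:
        policy = self.policies.get(key_id)
        if policy is None:
            reason = "unknown key"
        elif policy.revoked:
            reason = "key revoked"
        elif user not in policy.allowed_users:
            reason = "user not permitted"
        elif policy.require_mfa and not mfa_passed:
            reason = "MFA required"
        else:
            reason = "allowed"
        ts = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((ts, key_id, user, reason))  # allowed and denied alike
        return reason == "allowed"

    def revoke(self, key_id: str) -> None:
        self.policies[key_id].revoked = True
```

Every attempt, allowed or denied, is appended to `audit_log`. Revocation therefore takes effect on the next request, and the log records anyone who tries to use the key afterward.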

Authenticate Clients When They Request To Use A Key

Clients never need direct access to private keys, only to the digital signatures produced with them.

Using this principle, organizations can deploy a dedicated cryptographic server in front of the HSM. This server:

- Authenticates the end-user
- Enforces policy (MFA, device checks, approvals, etc.)
- Interfaces with the HSM to generate the digital signature
- Returns the signature for the client to forward to the resource server

Workflow summary:

- Client requests access to a resource
- Resource server sends a challenge
- Client forwards the challenge to the cryptographic server
- Cryptographic server authenticates the user and evaluates the configured controls
- Cryptographic server signs the challenge via the HSM and returns the signature
- Client forwards the signature to the resource server
- Resource server verifies the signature and grants access

Downstream systems remain unchanged—they simply receive and verify digital signatures as usual.
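The full exchange can be simulated end to end in a few classes. This sketch rests on stated assumptions. `ToyHSM` uses textbook RSA with publicly known Mersenne primes and no padding, which is insecure and for illustration only. The MFA check is an injected callable, and all class names are hypothetical. The point is structural: `ResourceServer` only issues challenges and verifies signatures with the public key, as it would without a cryptographic server in the path, while all policy lives in `CryptoServer`.

```python
import hashlib
import secrets
from typing import Callable, Optional, Set

# TOY textbook-RSA key (known Mersenne primes, no padding). Illustration only.
_P, _Q = 2**521 - 1, 2**607 - 1
N, E = _P * _Q, 65537  # public key, shared with resource servers


def _digest(msg: bytes) -> int:
    return int.from_bytes(hashlib.sha256(msg).digest(), "big")


class ToyHSM:
    """Holds the private exponent and only ever returns signatures."""

    def __init__(self) -> None:
        self._d = pow(E, -1, (_P - 1) * (_Q - 1))

    def sign(self, msg: bytes) -> int:
        return pow(_digest(msg), self._d, N)


class CryptoServer:
    """Authenticates the caller and evaluates policy before asking the HSM to sign."""

    def __init__(self, hsm: ToyHSM, allowed_users: Set[str],
                 mfa_check: Callable[[str], bool]) -> None:
        self.hsm = hsm
        self.allowed_users = allowed_users
        self.mfa_check = mfa_check  # hypothetical hook, e.g. a push or TOTP prompt

    def sign_challenge(self, user: str, challenge: bytes) -> Optional[int]:
        if user not in self.allowed_users or not self.mfa_check(user):
            return None  # denied: no signature is produced
        return self.hsm.sign(challenge)


class ResourceServer:
    """Unmodified downstream system: issues challenges, verifies with the public key."""

    def __init__(self, n: int, e: int) -> None:
        self.n, self.e = n, e
        self.pending: Set[bytes] = set()

    def issue_challenge(self) -> bytes:
        challenge = secrets.token_bytes(32)
        self.pending.add(challenge)
        return challenge

    def verify(self, challenge: bytes, signature: int) -> bool:
        if challenge not in self.pending:
            return False  # unknown or already-used challenge (replay)
        self.pending.discard(challenge)
        return pow(signature, self.e, self.n) == _digest(challenge)


def client_login(user: str, resource: ResourceServer, crypto: CryptoServer) -> bool:
    """Client: relay the challenge to the crypto server, then the signature back."""
    challenge = resource.issue_challenge()
    signature = crypto.sign_challenge(user, challenge)
    if signature is None:
        return False
    return resource.verify(challenge, signature)
```

A permitted user who passes MFA logs in successfully. A user outside `allowed_users`, or one who fails the MFA check, gets no signature at all. Replaying a signed challenge is rejected because each challenge is single-use.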

The Result: Enforce Security Controls Without Software Changes

When all cryptographic keys are centralized and key-based authentication is used across the enterprise, MFA and other advanced controls can be enforced at the session layer without modifying:

- Servers
- Applications
- Protocols
- Client software

Security teams can configure:

- MFA
- Device authentication
- Approval workflows
- JIT access
- Notifications
- And more

…all through centralized policy.

This approach also greatly simplifies audits, monitoring, and compliance, since all key usage flows through one control point.

Reach out to the Garantir team to learn more about deploying this architecture in your environment.