In a previous blog post, we described how a properly implemented code signing system can independently verify that the code being signed matches the code in the source control repository, via a feature called automated hash validation. We further showed how to do all of this without impeding performance.

When such a code signing solution is deployed, attackers are forced to insert their malicious code into the source control repository (thereby making it visible to the organization) and hope that it evades the analyzer tools and gets signed along with the rest of the legitimate code.

This post describes additional controls that an enterprise can put in place to prevent attackers from inserting malicious code into the source control repository, and to detect it if they succeed. While topics such as developer training, defining security requirements, and choosing a source control repository are important and relevant, they are out of scope for this post. We will cover these topics in a future post.

Preventing Attacks: Securely Committing Code

Imagine that you’re a developer and you are ready to commit your code to the source control repository. The first thing you must do is connect and authenticate to the repository. This is the first, and possibly most important, line of defense against code being inserted by a malicious party.

Just as the industry is moving towards passwordless authentication for web applications, SSH, and more, password-based authentication should be avoided for access to the source control repository. Instead, use a more robust approach that combines multi-factor authentication, device authentication, and behavior analysis. To insert malicious code into the repository, an attacker must then compromise a trusted device and steal both first and second factor authentication credentials, all while avoiding alerts for abnormal behavior. The alternative is to get help from an actual employee.

At this point, you may be thinking that this sounds great but isn’t feasible unless the source control repositories support these security controls and can integrate them into their clients. That is true, but repositories and clients already support these features, albeit in a not-so-obvious way.

Most source control repositories support key-based authentication. For example, Git supports authenticating over SSH with a key pair. While the default approach is to store the private key on the user’s workstation, a more secure approach is to keep the key on a separate device and use it remotely. In other words, the private key never touches the user’s workstation. Instead, when authentication is performed, the signature is generated inside a secure hardware device. Because of this, the external service managing the private key can enforce the additional security controls (multi-factor authentication, device authentication, and behavior analysis), all without requiring any changes to the source control repository.
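To make the idea concrete, here is a minimal Python sketch of the policy check a remote signing service might run before letting its HSM-held key answer an SSH authentication challenge. Everything in it is a hypothetical placeholder: the `SigningRequest` fields, the device registry, and the working-hours baseline stand in for real MFA, device attestation, and behavior analytics. The signing operation itself, which would happen inside the HSM, is not shown.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical device registry: device IDs approved for each user.
REGISTERED_DEVICES: dict[str, set[str]] = {
    "alice": {"laptop-7f3a"},
    "bob": {"desktop-91c2"},
}

# Hypothetical behavior baseline: UTC hours in which each user normally works.
USUAL_HOURS_UTC: dict[str, range] = {
    "alice": range(8, 19),
    "bob": range(13, 23),
}


@dataclass
class SigningRequest:
    user: str
    device_id: str
    mfa_verified: bool      # result of a (hypothetical) second-factor check
    requested_at: datetime  # timezone-aware timestamp of the request


def authorize_key_use(req: SigningRequest) -> tuple[bool, str]:
    """Decide whether the HSM-held SSH key may sign this authentication challenge."""
    if not req.mfa_verified:
        return False, "second factor not verified"
    if req.device_id not in REGISTERED_DEVICES.get(req.user, set()):
        return False, "unregistered device"
    hour = req.requested_at.astimezone(timezone.utc).hour
    if hour not in USUAL_HOURS_UTC.get(req.user, range(0)):
        return False, "outside normal working hours"
    return True, "ok"
```

Only when `authorize_key_use` returns `True` would the service ask the HSM for a signature, and each denial doubles as an alert. The repository only ever sees an ordinary SSH signature, which is why no server-side changes are needed.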

The next step is authorization: verifying that the user is allowed to commit to the repository. Different repositories support different permission models but, regardless of what permissions are available, the principle of least privilege should be applied. Developers should only be able to read from and write to the repositories they need to access. If a developer moves to a new project, their permissions should be modified accordingly (i.e., permissions on the old project should be removed or downgraded). Users and systems that only need to pull from repositories (e.g., CI/CD build servers) should have read-only access to the repositories they need, and nothing more.
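These rules amount to a deny-by-default permission table. The sketch below models one in Python with hypothetical principals and repository names. It is not any repository product’s permission API; real platforms express the same idea through their own roles and settings.

```python
from enum import IntEnum


class Access(IntEnum):
    NONE = 0
    READ = 1
    WRITE = 2  # WRITE implies READ because levels are ordered


# Hypothetical grants: principal -> {repository: access level}.
grants: dict[str, dict[str, Access]] = {
    "dev-alice": {"payments-api": Access.WRITE},
    "ci-build-01": {"payments-api": Access.READ, "web-frontend": Access.READ},
}


def can(principal: str, repo: str, needed: Access) -> bool:
    """Deny by default; allow only if an explicit grant meets the needed level."""
    return grants.get(principal, {}).get(repo, Access.NONE) >= needed


def move_project(principal: str, old_repo: str, new_repo: str) -> None:
    """Reassign a developer by revoking the old grant instead of accumulating access."""
    grants.setdefault(principal, {}).pop(old_repo, None)
    grants[principal][new_repo] = Access.WRITE
```

Note that `move_project` removes the old grant rather than adding to it, so access does not quietly accumulate as developers change teams.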

Some repositories, such as Git, support signing commits. If you are using a repository that supports this feature, you should enforce it for all team members. Similar to the method described above for key-based authentication, the signing keys should be stored and managed by a secure signing service where the key never touches the end-user’s workstation. The same advanced security controls (multi-factor authentication, device authentication, and behavior analysis) can be applied, as necessary.
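In Git, signing can be turned on per user with `git config commit.gpgsign true`, and `git log --format="%H %G?"` reports each commit’s signature status (`G` for a good, valid signature, `N` for no signature, `B` for a bad one, and so on). The sketch below uses that output to list commits that would fail a "signed commits only" policy, for example as a CI check. The `origin/main..HEAD` default range is an assumption about your branch layout.

```python
import subprocess

# Per the git-log documentation, %G? prints "G" for a good (valid) signature.
# Every other code (e.g., "N" unsigned, "B" bad, "E" cannot be checked) fails the audit.
ACCEPTED = {"G"}


def unsigned_or_invalid(log_output: str) -> list[str]:
    """Return the hashes of commits whose signature status is not accepted."""
    failures = []
    for line in log_output.splitlines():
        if not line.strip():
            continue
        commit, _, status = line.partition(" ")
        if status.strip() not in ACCEPTED:
            failures.append(commit)
    return failures


def audit(rev_range: str = "origin/main..HEAD") -> list[str]:
    """Run git log over a revision range (assumed default) and audit each commit."""
    out = subprocess.run(
        ["git", "log", "--format=%H %G?", rev_range],
        capture_output=True, text=True, check=True,
    ).stdout
    return unsigned_or_invalid(out)
```

The machine running the audit needs the team’s public signing keys configured; otherwise valid signatures show up as `E` (cannot be checked) and fail.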

Most, if not all, repositories allow developers to provide a comment along with the commit. This is a text (or “string”) value that allows the developer to provide context on what is being committed. Since all development activity should trace back to a need for the code change (e.g., requirement, bug fix, etc.), the comment should reference some form of identifier (e.g., requirement number, bug ID, etc.). If your repository supports hooks that can inspect commit messages (in Git, a client-side commit-msg hook or a server-side pre-receive hook), you can use them to enforce this practice. Otherwise, you can check it as part of the code review process (more on this below).
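For Git, a client-side commit-msg hook receives the path of the proposed message file as its only argument and aborts the commit if it exits with a non-zero status. The sketch below assumes a hypothetical ticket format such as `PAY-1042`. To use it, save it as an executable `.git/hooks/commit-msg` script and end the file with `sys.exit(main(sys.argv))`.

```python
import re
import sys

# Assumed ticket-ID format, e.g. "PAY-1042" or "BUG-7"; adjust for your tracker.
TICKET_PATTERN = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def has_ticket_reference(message: str) -> bool:
    """True if a non-comment line of the message mentions a ticket ID."""
    # Git may leave "#" comment lines in the message file; ignore them.
    lines = [line for line in message.splitlines() if not line.lstrip().startswith("#")]
    return TICKET_PATTERN.search("\n".join(lines)) is not None


def main(argv: list[str]) -> int:
    """Hook entry point: argv[1] is the path to the proposed commit message."""
    with open(argv[1], encoding="utf-8") as f:
        message = f.read()
    if has_ticket_reference(message):
        return 0
    sys.stderr.write("commit rejected: reference a requirement or bug ID (e.g., PAY-1042)\n")
    return 1  # a non-zero exit status makes Git abort the commit
```

Because developers can skip client-side hooks with `git commit --no-verify`, the same `has_ticket_reference` check is worth repeating on the server (for example, in a pre-receive hook) or in CI.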

Detecting Attacks: The Anatomy of a Build

Once you’ve implemented the steps listed above, an attacker would need to go to extraordinary lengths or have insider access in order to commit code to the repository. If this happens, the goal is to detect the issue as soon as possible. Luckily, the CI/CD pipeline offers many opportunities to catch malicious, or otherwise undesired, code.

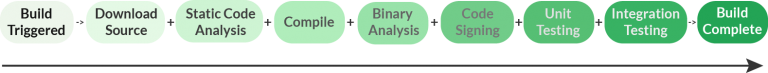

In a standard CI/CD environment, every code commit to the repository triggers what is known as a build. The build server downloads the new source code, compiles it (and performs other build activities), and runs a set of tests against the binaries (and, sometimes, the source code). Every environment can be configured differently, but below are some recommendations to help detect offending code. If these items seem like they would significantly slow down your pipeline, keep in mind that, with post-sign hash validation (as described in our automated hash validation post), they can run without blocking the signing step.
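To see why post-sign validation keeps the pipeline moving, compare the two modes side by side. This is a minimal sketch, assuming a hypothetical `reference_digest` callable that returns the SHA-256 digest of the same artifact built independently from the repository (which presumes a reproducible build), and a stand-in `sign` function in place of a real HSM call.

```python
import hashlib
from typing import Callable


def sha256(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def pre_sign(artifact: bytes, reference_digest: Callable[[], str],
             sign: Callable[[bytes], bytes]) -> bytes:
    """Validate first; sign only if the artifact matches the repository build."""
    if sha256(artifact) != reference_digest():
        raise PermissionError("artifact does not match source control; refusing to sign")
    return sign(artifact)


def post_sign(artifact: bytes, reference_digest: Callable[[], str],
              sign: Callable[[bytes], bytes]) -> tuple[bytes, Callable[[], bool]]:
    """Sign immediately and hand back a deferred validation check."""
    signature = sign(artifact)
    digest = sha256(artifact)

    def validate() -> bool:
        # Meant to run later (e.g., in a background job); False means alert and revoke.
        return digest == reference_digest()

    return signature, validate
```

In pre-sign mode, the build waits for validation before it is signed. In post-sign mode, the signature is returned immediately and `validate` can run later, with a mismatch triggering an alert and revocation.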

Unit & Integration Testing

Both unit and integration testing should be employed to ensure that the software functions correctly after every commit. The testing shouldn’t be limited to validating positive scenarios; negative testing should be included at both the unit and integration levels to ensure the code can properly handle unwanted input and behavior. Verification of these tests should also include log analysis to ensure that sensitive data isn’t being logged and errors aren’t being ignored (e.g., by poor practices such as logging and swallowing exceptions).
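As an illustration, the Python `unittest` sketch below pairs a positive test with negative tests and a log check. The `charge` function is a hypothetical unit under test; what matters is the shape of the tests: malformed input must raise rather than be silently accepted, and captured log records must not contain the full card number.

```python
import logging
import unittest

log = logging.getLogger("payments")


def charge(card_number: str, cents: int) -> str:
    """Hypothetical unit under test."""
    if not (card_number.isdigit() and 12 <= len(card_number) <= 19):
        raise ValueError("invalid card number")
    if cents <= 0:
        raise ValueError("amount must be positive")
    # Log only the last four digits, never the full number.
    log.info("charging card ending in %s for %d cents", card_number[-4:], cents)
    return "ok"


class ChargeTests(unittest.TestCase):
    def test_valid_charge(self):
        self.assertEqual(charge("4111111111111111", 500), "ok")

    def test_rejects_unwanted_input(self):
        # Negative tests: bad input must raise instead of being silently accepted.
        for card, cents in [("", 500), ("4111-1111", 500),
                            ("4111111111111111", 0), ("4111111111111111", -1)]:
            with self.subTest(card=card, cents=cents):
                with self.assertRaises(ValueError):
                    charge(card, cents)

    def test_logs_exclude_card_number(self):
        # Log analysis: capture the records and check them for sensitive data.
        with self.assertLogs("payments", level="INFO") as captured:
            charge("4111111111111111", 500)
        self.assertTrue(any("1111" in line for line in captured.output))
        for line in captured.output:
            self.assertNotIn("4111111111111111", line)
```

Run it with `python -m unittest` as part of every build.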

Scanning

Multiple forms of scanning, including static, dynamic, and shape code analysis, as well as antivirus scanning, should be run on the code to look for issues. There are many third-party tools, such as SonarQube, Fortify, Contrast, Visual Studio Analyzers, SpotBugs, Microsoft Defender, and more, that can be used for scanning. If picking the right ones for your environment seems like a daunting task, there are products such as MetaDefender that can perform scanning using multiple antivirus engines.
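Off-the-shelf scanners can be supplemented with simple, organization-specific rules. The Python sketch below is a hypothetical example, not a substitute for the tools named above: it flags long Base64-like string literals, native Windows API imports, and WMI queries in C# source, traits that are unusual in many .NET business applications.

```python
import re
from dataclasses import dataclass

# Hypothetical rules; tune the patterns and thresholds for your own codebase.
RULES = {
    "long-base64-literal": re.compile(r'"[A-Za-z0-9+/]{40,}={0,2}"'),
    "native-api-import": re.compile(
        r'DllImport\(\s*"(kernel32|advapi32|ntdll)(\.dll)?"', re.IGNORECASE),
    "wmi-query": re.compile(r"ManagementObjectSearcher|Win32_", re.IGNORECASE),
}


@dataclass
class Finding:
    path: str
    line: int  # 1-based line number
    rule: str


def scan_source(path: str, text: str) -> list[Finding]:
    """Return at most one finding per rule per line of the given source text."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append(Finding(path, lineno, rule))
    return findings
```

A finding is a prompt for a human to look closer, not proof of malice; legitimate code will sometimes trip rules like these, so expect to tune them.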

Fuzzing

Fuzzing should be used to validate that the software behaves correctly (and safely) when provided invalid, unexpected, or random inputs. At a minimum, the software should be validated to not crash, leak memory, fail built-in assertions, or otherwise go into a bad state. Fuzzing can be used in combination with unit and integration testing as well as dynamic code analysis.
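The loop below is a deliberately naive random fuzzer written in Python, shown only to illustrate the idea; production fuzzing normally uses coverage-guided tools such as libFuzzer, AFL++, or Atheris. It treats `ValueError` (the way the hypothetical `parse_header` rejects bad input) as acceptable and records any other exception as a crash. Memory leaks and assertion failures in native code are outside what this sketch can detect.

```python
import random
from typing import Callable


def fuzz(target: Callable[[bytes], object], iterations: int = 5000,
         max_len: int = 64, seed: int = 0,
         expected: tuple[type[BaseException], ...] = (ValueError,)
         ) -> list[tuple[bytes, BaseException]]:
    """Feed random byte strings to target and collect unexpected exceptions."""
    rng = random.Random(seed)  # fixed seed so any failure is reproducible
    crashes = []
    for _ in range(iterations):
        data = bytes(rng.randrange(256) for _ in range(rng.randrange(max_len + 1)))
        try:
            target(data)
        except expected:
            pass  # graceful rejection of bad input is the desired behavior
        except Exception as exc:  # anything else is a bug worth triaging
            crashes.append((data, exc))
    return crashes


def parse_header(data: bytes) -> int:
    """Hypothetical parser: a 1-byte length prefix followed by that many ASCII bytes."""
    if not data:
        raise ValueError("empty input")
    length = data[0]
    body = data[1:1 + length]
    if len(body) != length:
        raise ValueError("truncated input")
    return len(body.decode("ascii"))  # UnicodeDecodeError is a ValueError subclass
```

The fixed seed makes any crash reproducible, so a failing input can be turned directly into a regression test.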

Non-Production Signing

Since the code in the build has most likely not yet been reviewed (see below), the build should not be signed with the production code signing key. Instead, the build should be signed with a test key, and the test environment should be configured to trust the test key.
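One way to make the test-key default explicit is to have the pipeline choose the key from the build’s review status. In this hypothetical Python sketch, the key labels and `Build` fields are placeholders; the design choice it shows is failing safe, so anything that is not a reviewed release falls back to the test key.

```python
from dataclasses import dataclass
from enum import Enum


class SigningKey(Enum):
    TEST = "test-code-signing"        # hypothetical HSM key label, trusted only in test
    PRODUCTION = "prod-code-signing"  # hypothetical HSM key label, trusted by customers


@dataclass(frozen=True)
class Build:
    commit: str
    reviewed: bool  # every commit in the build has passed code review
    release: bool   # the build is a release candidate


def select_key(build: Build) -> SigningKey:
    """Default to the test key; use production only for reviewed release builds."""
    if build.release and build.reviewed:
        return SigningKey.PRODUCTION
    return SigningKey.TEST
```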

In the case of X.509 certificates (i.e., the certificates used for signing code on Windows, macOS, Java, etc.), this requires issuing the signing certificate from an internal PKI and importing the internal CA’s certificate(s) into the trust stores on the test systems. In the case of GPG keys (i.e., the keys used for signing RPM and Debian packages), this requires importing the GPG public key as trusted on the test systems.

The test signing key should still be secured in an HSM and properly managed, as compromise of it can lead to compromise of the test environments. To further reduce that risk, the internal PKI should be trusted on as few computers and devices as possible. For example, if there is an internal enterprise PKI that is trusted on every domain-joined computer for issuing machine and end-user certificates, consider using a different PKI for issuing the code signing certificate. The reason for this is to minimize the blast radius should something malicious get signed by the build server.
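Minimizing the blast radius is easier to verify when trust is treated as data. The sketch below assumes a hypothetical inventory mapping machine groups to the CA certificates they trust (in practice this might be exported from your endpoint management tooling) and reports where the test code signing CA is trusted beyond its intended scope.

```python
# Hypothetical inventory: which CA certificates each group of machines trusts.
TRUST_STORES: dict[str, set[str]] = {
    "test-lab": {"Public Root CA", "Internal Test Code Signing CA"},
    "corporate-workstations": {"Public Root CA", "Enterprise Issuing CA"},
    "production-servers": {"Public Root CA"},
}


def blast_radius(ca_name: str, stores: dict[str, set[str]]) -> list[str]:
    """Machine groups that would accept code signed under this CA if its key leaked."""
    return sorted(group for group, trusted in stores.items() if ca_name in trusted)


def violations(ca_name: str, allowed_groups: list[str],
               stores: dict[str, set[str]]) -> list[str]:
    """Groups that trust the CA even though they are not meant to."""
    return sorted(set(blast_radius(ca_name, stores)) - set(allowed_groups))
```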


Detecting Attacks: The Human Factor

Automated processes are great, but they won’t catch everything, especially advanced attacks by nation-states (or other attackers with lots of resources) and crafty insider threats. An important manual line of defense is code review.

At least one independent and well-qualified developer should review every commit that goes into the final release. The code should be reviewed for correctness, security issues, need, quality, and so on. Including this as part of the release process helps protect against an insider attack (i.e., where an authorized developer is the one committing the malicious code to the repository), provided the reviewers aren’t all part of the attack.
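Repository platforms typically enforce this with protected-branch or approval-rule settings. The Python sketch below shows only the rule itself, using a hypothetical `Approval` record: a commit passes when enough qualified reviewers other than the author have approved it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Approval:
    reviewer: str
    qualified: bool  # hypothetical flag, e.g. the reviewer is on the project's reviewer list


def review_satisfied(author: str, approvals: list[Approval], required: int = 1) -> bool:
    """True when at least `required` distinct, qualified non-authors approved."""
    independent = {a.reviewer for a in approvals if a.qualified and a.reviewer != author}
    return len(independent) >= required
```

Excluding the author and de-duplicating reviewers keeps a single insider from approving their own change or being counted twice.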

Some teams are hesitant to perform code reviews on all commits because the time it takes to perform the review is considered too costly. However, the cost of a software defect increases the longer the company waits to resolve it, so the code review process is likely less expensive than one might imagine. Additionally, code reviews are a great way to train new developers joining the team (but the review should also be performed by someone of equal or greater seniority, whenever possible).

The Million Dollar Question

The natural question right now is whether the techniques described in this blog post, as well as the automated hash validation post, would have helped to thwart the recent SolarWinds supply chain attack.

As a quick aside, it is important to note that SUNBURST was a very sophisticated attack launched by well-resourced nation-state attackers, and many companies could have (and may have) fallen victim to it. We mention it here due to its relevance to the topic of this post, not to imply that SolarWinds or any other company had insufficient processes or procedures in place.

First, let’s state some important facts we know about the SolarWinds attack (thanks to the great write-ups by ReversingLabs and FireEye, as well as the information provided by SolarWinds):

- The attackers were able to get the build process to sign something that didn’t match what was in the repository.

- The source code for the malware was formatted in a manner consistent with the rest of the codebase.

- The source code for the malware contained Base64 encoded compressed strings.

- The source code for the malware contained unusual components for a .NET application, such as references to kernel32.dll, using WMI to enumerate system information, enumerating and tampering with account privileges, tampering with system shutdown, and more.

- The binaries were signed within a minute of being compiled.

- The malware stayed dormant for up to two weeks before retrieving and executing commands.

- The malware used multiple obfuscated blocklists to identify and evade forensic tools and antivirus software.

Fact #1 states that the build server signed something that does not match what is in the source control repository. With the automated hash validation technique in place, this could have been outright prevented (with pre-sign validation) or quickly detected (with post-sign validation).

For educational purposes, let’s assume that the source control repository was compromised and evaluate how automated hash validation would have performed. That is, we’re assuming the attackers were able to commit their malicious source code to the repository. With the strong authentication mechanisms described above in place, this would require the attackers to either (a) compromise a trusted device, steal first and second factor authentication credentials, and evade abnormal behavior detection, or (b) get help from an employee who has access to the repository.

Once committed to the repository, the build server would request to sign the code, which would trigger automated hash validation. The validation process would confirm that the code it is signing matches the code in the repository, so validation would complete successfully (i.e., no issue would be detected despite the presence of malware).

The next line of defense is the scanners and analyzers that the hash validation process can trigger. It is not clear whether these would have caught the attack, since many security vendors had to update their signature definitions after the SolarWinds attack became public knowledge. Given this information, plus facts 2 and 3 stated above, we will give the benefit of the doubt to the attackers and assume they would have remained undetected, with the caveat that there may be some scanning tools out there that would have detected the issue.

Given facts 6 and 7, the build environment would have needed to advance its system date (a step the malware could defeat by getting the time from an external source, such as an NTP server) and possibly to change its network settings and registry values, just to have a chance of triggering the malware. As a result, it is very unlikely that the automated testing or fuzzing in the CI/CD pipeline would have caught the issue.

The last line of defense is the code review. While fact 2 means that the code blended in stylistically with the rest of the code in the repository, facts 3 and 4 would definitely raise red flags for any qualified reviewer. The reviewers would see which developer authored the code and question them about the use of hardcoded Base64 encoded strings (#3) and unusual privileges and components (#4). If the malware’s source code evaded code review, it would likely be the result of inadequate review work and/or the review being performed by other insider threats.

In summary, since the attackers were not able to compromise the source control repository, automated hash validation would have prevented or quickly detected the SUNBURST attack. We further believe that, with all the mechanisms described in these posts in place, it would be extraordinarily difficult for an attacker to commit malicious code to the repository and have it signed with the production signing key, without assistance from multiple insiders.

Allow us to be clear: Garantir does not have any reason to believe and is not insinuating that any SolarWinds employees assisted the attackers in any way or were otherwise aware of the attack as it took place. Our analysis above is based on the presumed use of automated hash validation, a technique that SolarWinds almost certainly was not using, and the ability of the attacker to commit malicious code to the repository, something that we know now didn’t happen in the SolarWinds attack.

Next Steps

OK, you’ve implemented automated hash validation, added every security test and scan to your CI/CD environment, and require code review on every code commit – you’re good to go now, right? Not yet. All these features mean very little if you don’t secure the software delivery, installation, update, and runtime processes and environments. Signing code has no value if the client doesn’t (properly) validate the signature. And while validating signatures is important, there is a lot more to it than just that – but we will save that for another post.

Looking to secure your build process with the techniques described here? Garantir can help. GaraSign implements automated hash validation for code signing and supports strong authentication to repositories and other endpoints, all with HSM-protected private keys. Easily enforce multi-factor authentication, device authentication, approval workflows, and more, with the same tools you use today. Get in touch with the Garantir team to learn more.